How to Create a Custom Copilot Using Microsoft Creator Tools

Learn how Copilot chatbots can deliver better experiences to your customers

Conversational chatbots aren’t a new UI paradigm; many large consumer companies have provided chatbot support agents—with varying degrees of success.

Now you can use new Microsoft tools to create custom Copilots so your users can easily interact with enterprise data and services.

What’s a Copilot?

A “Copilot” generally incorporates the following features and capabilities:

- A conversational (chatbot style) user interface.

- Features that help users access application or data functionality through natural language queries or instructions.

- Use of Large Language Models to generate natural-language responses to prompts.

- Data input and output that is usually text, but may be multi-modal, e.g. accepting input and providing output via text, image, sound and/or video.

Microsoft has released a wide array of conversational UI applications (called Copilots) to help users work with its own products, such as Microsoft 365, Dynamics CRM, GitHub and more. Microsoft develops Copilots as companion apps or as integrated features within its products.

In parallel, Microsoft has released Copilot development tools to enable its customers to create their own custom Copilots. These low-code and pro-code developer tools allow you to create and deploy your own Copilots for your customers.

Custom Copilot Development

Historically, creating LLM-backed chatbots (Copilots) has been developer-intensive, requiring custom programming of the front end (chat UI), the back end (LLM and data retrieval) and a logic layer that tracks conversation state for multi-turn Copilots.
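To make the three layers concrete, here is a minimal sketch of the logic layer that a hand-built multi-turn chatbot needs. The `Conversation` class and the `echo_llm` function are hypothetical names used only for illustration; `echo_llm` stands in for a real LLM call so the example runs without any service.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": "user" | "assistant", "content": "..."}


def echo_llm(messages: List[Message]) -> str:
    """Hypothetical stand-in for a real LLM call; reports how many user turns it saw."""
    user_turns = [m for m in messages if m["role"] == "user"]
    return f"(turn {len(user_turns)}) You said: {user_turns[-1]['content']}"


@dataclass
class Conversation:
    """Logic layer: keeps multi-turn state and forwards it to the back end."""
    llm: Callable[[List[Message]], str]
    history: List[Message] = field(default_factory=list)

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        reply = self.llm(self.history)  # the back end receives the full history
        self.history.append({"role": "assistant", "content": reply})
        return reply


if __name__ == "__main__":
    chat = Conversation(llm=echo_llm)
    print(chat.ask("What is our refund policy?"))
    print(chat.ask("Does it apply to sale items?"))
```

A chat UI would call `ask` once per user message. Even in this toy form, the history bookkeeping is the kind of plumbing that the tools discussed in this article aim to take off the developer's plate.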

As the Generative AI tooling market has evolved over the last two years, powerful tools have emerged from a wide range of sources that reduce the engineering effort required to develop and deploy a Copilot-style solution.

- LangChain provides widely used open-source tools for state management, retrieval-augmented generation (RAG) integration and LLM SDKs.

- OpenAI introduced tools allowing anyone to create “Custom GPTs” hosted in its app store.

- Enterprise providers like Microsoft and others provide tooling to their customers to enable Copilot development to augment internal and customer-facing applications.

Microsoft’s Copilot Development Tools

Microsoft’s development tools for custom Copilots include both “low code” and “pro code” development tools. While it may seem confusing that there are multiple options to create custom Copilots, each alternative has its own ideal use case, and selecting the best option becomes relatively straightforward when considering functional requirements, developer skill sets and deployment needs.

In this article I’ll contrast two development platforms Microsoft provides for fully customized Copilot applications: Copilot Studio and Azure ML Prompt Flow.

Copilot Studio

Copilot Studio is the evolution of Microsoft’s Power Virtual Agents, which itself is the conversational UI component within the Microsoft Power Platform. As a Power Platform solution, it’s generally designed to be a “no code/low code” solution, where developers configure applications in high-level tools, and deploy them in a SaaS model.

Copilot Studio is geared toward creating applications that leverage other Microsoft products, such as Dataverse, Azure OpenAI Service and Azure SQL Database, to provide process automation and workflow solutions.

Azure ML Prompt Flow

Azure ML Prompt Flow is part of Microsoft’s PaaS AI/ML offerings and follows a model development/model deployment paradigm. It assumes Copilot developers have skills in machine learning and Python programming, and are able to manage PaaS resources such as RESTful web endpoints.

Prompt Flow is geared toward integrating machine learning, generative AI and AI services into custom solutions, with a high level of customization and flexibility.
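As a rough illustration of what a custom Python step in a flow might look like, the sketch below defines one function that picks the most relevant documents for a question. The function name `select_context` and its keyword-overlap ranking are assumptions made for this example, not part of any Microsoft sample. The `tool` decorator is imported from the `promptflow` package when it is installed; otherwise a no-op stand-in is used so the code still runs.

```python
from typing import List

try:
    # Available when the promptflow package is installed.
    from promptflow import tool
except ImportError:
    # Fallback so the sketch runs without promptflow: a no-op decorator.
    def tool(func):
        return func


@tool
def select_context(question: str, documents: List[str], max_docs: int = 2) -> str:
    """Return the documents that share the most words with the question."""
    q_words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda d: len(q_words & set(d.lower().split())),
        reverse=True,  # highest overlap first; ties keep their input order
    )
    return "\n".join(f"- {d}" for d in ranked[:max_docs])


if __name__ == "__main__":
    docs = [
        "Shipping takes five days",
        "Our refund policy covers all orders",
        "Refund requests need a receipt",
    ]
    print(select_context("refund policy for orders", docs))
```

In a real flow, the output of a step like this would typically feed a prompt or LLM step. A production solution would more likely use a vector index than keyword overlap.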

Copilot Studio and Prompt Flow Feature Comparison

|

|

Copilot Studio |

Azure ML Prompt Flow |

|

Developer Experience |

No-code, configurable component paradigm. |

Highly customizable component assembly. |

|

Assumes coding skills? |

No. Components are generally assembled via drag & drop with property sheet configuration. |

Yes. Components are highly customizable via Python code snippets. |

|

Developer Flexibility |

Microsoft provides components to integrate with a vast array of its services. |

Completely open. Pre-built components can be mixed with custom code where needed to meet virtually any requirement. |

|

Manages conversation state |

Yes |

Yes |

|

LLM Integrations |

Yes |

Yes |

|

Generates front-end UI |

Yes, standardized UI hosted by Microsoft as a web component. |

No, deployed solutions are REST endpoints integrated with customer provided UI. |

|

Customizable UI |

Limited |

Yes |

|

Deployment Method |

SaaS deployment billed based on level of user interaction with the deployed Copilot. |

Deployed as an Azure endpoint, billed based on configured compute instance size. |

|

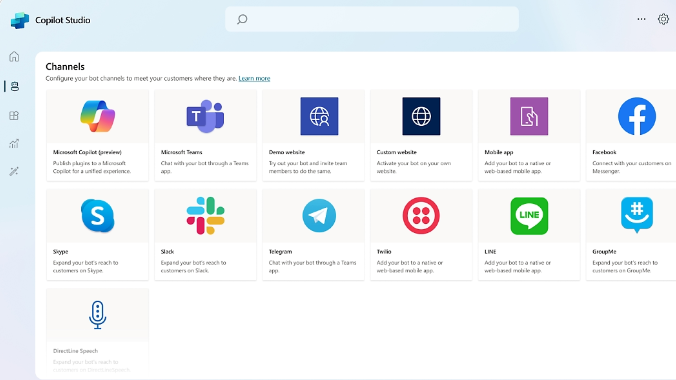

Deployment Channels |

Preconfigured Channels: Teams, Custom Website, Mobile App, Facebook, Azure Bot Service Channels |

Any that can consume a REST endpoint; integration with channel is developer responsibility. |

|

Administrative Requirements |

None. SaaS product; Microsoft manages scaling and availability. |

Some. Deployment is point & click but scaling & sizing is developer-specified. |

When Should You Consider Copilot Studio?

Some guidelines for selecting Copilot Studio:

- Copilot Studio is an excellent choice for creating custom Copilots using low/no code, especially when the Copilot data sources and processes are part of the Microsoft ecosystem (Dataverse, Dynamics, SharePoint, etc.).

- Copilot Studio is especially geared toward process automation and workflows, which isn’t surprising given its Power Apps DNA.

When Should You Consider Azure ML Prompt Flow?

- Azure ML Prompt Flow is geared toward providing Copilots that leverage ML/AI models, especially LLMs such as OpenAI's models and others available from the Azure ML model catalog.

- While Prompt Flow offers far fewer pre-built widgets than Copilot Studio, the ability to incorporate custom data sources, procedural logic and external integrations is virtually unlimited. Almost any feature that can be implemented in Python can be part of a Prompt Flow solution.

- The back-end integrations, logical Copilot flow and state management developed using Prompt Flow are deployed as a web endpoint (REST). While it’s the creator’s responsibility to integrate the REST endpoint with the end-user experience, there are no limitations on how or where the Prompt Flow functionality is ultimately delivered.

- The UI/UX of how a Prompt Flow Copilot is experienced is entirely configurable. A Prompt Flow experience could be completely embedded within an existing custom application, for example.
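Because a deployed flow is reached over REST, the client side can be sketched in a few lines of standard-library Python. Everything specific here is an assumption for illustration: the `ENDPOINT_URL` and `API_KEY` values are placeholders, the `question` and `chat_history` field names must match whatever inputs the flow actually defines, and the bearer-key header reflects the key-based authentication commonly used with Azure ML online endpoints.

```python
import json
import urllib.request
from typing import Any, Dict, List

# Hypothetical values; a real deployment supplies its own scoring URL and key.
ENDPOINT_URL = "https://example-copilot.example.com/score"
API_KEY = "<your-endpoint-key>"


def build_request(question: str, chat_history: List[Dict[str, Any]]) -> urllib.request.Request:
    """Build the POST request; field names are assumed and must match the flow's inputs."""
    payload = {"question": question, "chat_history": chat_history}
    return urllib.request.Request(
        ENDPOINT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def ask_copilot(question: str, chat_history: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Send the request and decode the JSON reply (needs a live endpoint)."""
    with urllib.request.urlopen(build_request(question, chat_history), timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Any front end that can issue an HTTP request (a web app, a mobile app or an existing line-of-business application) could wrap a call like `ask_copilot` behind its own chat UI.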

A Brief Mention of Azure AI Bot Service

In this article, I intentionally didn’t cover a third option: Azure AI Bot Service (Bot Service). Bot Service can be thought of as the “pro code” sibling of Copilot Studio. The two have areas of interoperability and overlap; for example, Copilot Studio solutions can be deployed to the Bot Service Channels.

Some guidance on when to use Bot Service:

- If the application requirements align more with Copilot Studio (workflow and process automation), but more “pro code” approaches or advanced logic are needed to meet requirements, consider including Bot Service as part of the solution.

- Copilot Studio should be considered first, and used unless the application requirements call for more customization.

Large Language Models are revolutionizing how applications are delivered to users

In the past, providing natural language user interfaces was challenging and expensive, and required specialized skills. Today we can leverage LLMs to provide high-quality natural language interfaces to almost any application cost-effectively.

While the LLMs themselves are the engine that handles natural language input and output, a Copilot solution also needs state management, data sources and process integration. Fortunately, the middleware and development tooling needed to create high-quality Copilot solutions is available, cost-effective and accessible to creators of all skill levels.

Rob Kerr is VP, Artificial Intelligence at DesignMind.
